ChatGPT is currently the most popular Large Language Model (LLM) in the U.S., but not for long if Google gets its way.

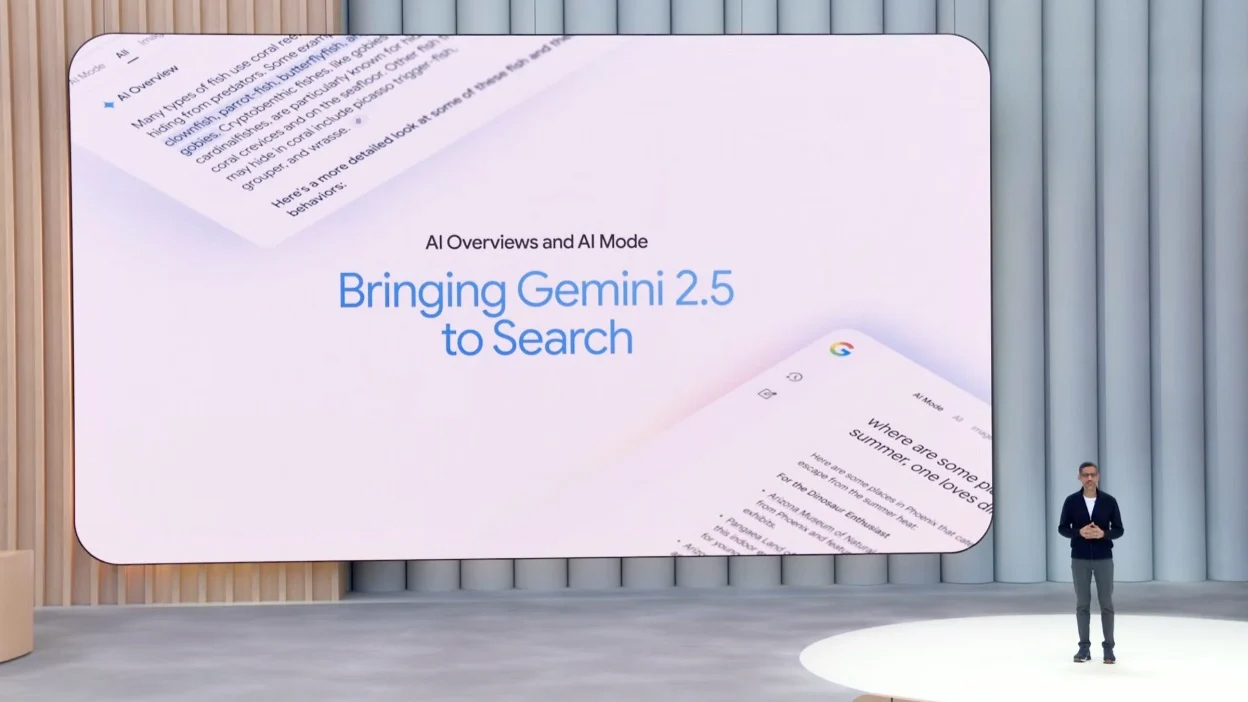

At Google I/O, its biggest developer conference of the year, the search giant outlined a future in which its LLM Gemini moves into the top spot and takes household-name status from ChatGPT. The conference was livestreamed and is available to stream online.

The Silicon Valley conference, first held in 2008, showcases new products, services, and platform updates. This year, the two-day event, held May 20-21, focused on AI, with Gemini in the starring role.

Google CEO Sundar Pichai spent two hours during opening remarks on Tuesday (May 20), turning the spotlight on upcoming AI developments, such as real-time language translation and digital assistants.

“More intelligence is available, for everyone, everywhere. And the world is responding, adopting AI faster than ever before. Over 7 million developers are building with Gemini, five times more than this time last year,” Pichai said.

Search Reimagined With AI Mode

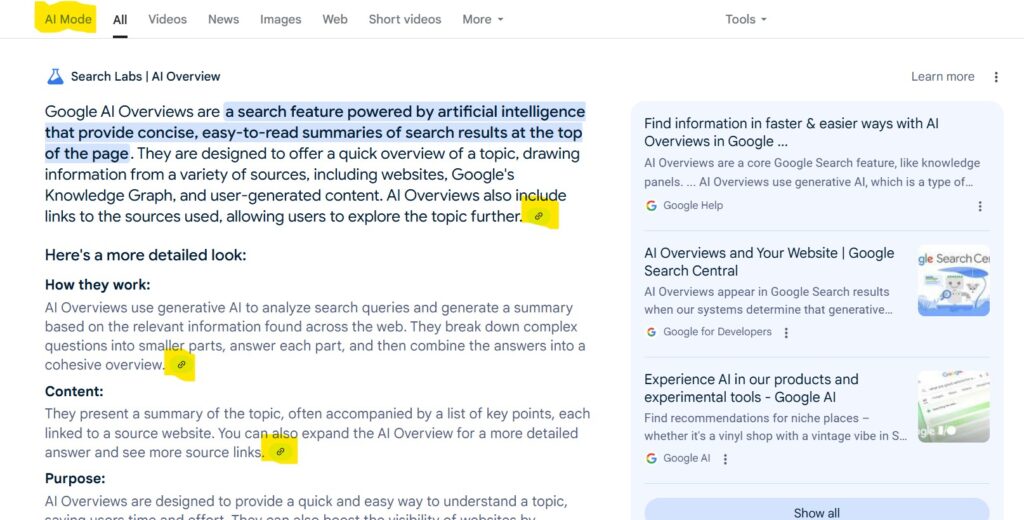

Google has about 90% of the search market, a share threatened not by other search engines but by people turning to LLMs instead. Google rolled out AI Overviews at last year’s conference, and this year, it’s bringing the U.S. market the research links to go with those overviews.

AI Overviews have scaled to over 1.5 billion users and are now in 200 countries and territories, Google said. Power users have told the company they want an end-to-end AI Search experience, Elizabeth Reid, VP of Search, said during the conference on Tuesday.

“Earlier this year we began testing AI Mode in Search in Labs, and starting today we’re rolling out AI Mode in the U.S. — no Labs sign-up required. AI Mode is our most powerful AI search, with more advanced reasoning and multimodality, and the ability to go deeper through follow-up questions and helpful links to the web. Over the coming weeks, you’ll see a new tab for AI Mode appear in Search and in the search bar in the Google app,” Reid said.

Key features, outlined on The Keyword Blog, include:

◾Conversational Interface: Dynamic 2-way interactions, easy follow-up questions

◾Multimodal Input: Queries via text, voice, or images

◾Comprehensive Responses: Powered by Google’s Gemini models, different sources are combined for new, cohesive understanding

◾Personalized Results: Provides context-aware and personalized search experiences

◾Seamless Integration: Integrates information from Google’s Knowledge Graph and other services for richer results

Search will carry on a conversation, synthesize real-time data, and do things on the user’s behalf. AI Mode changes how people search while redefining the interface of the open web.

AI Mode, which runs on Gemini, will also power Google’s new Deep Search initiative, which can handle complex, interdisciplinary queries. Deep Search will issue multiple search queries simultaneously, apply reasoning across the results, and more.

Highlights of Deep Search in AI Mode include:

◾Issuing Multiple Queries: Automatically generates and processes related searches to provide comprehensive information

◾Advanced Reasoning: Uses the Gemini 2.5 AI model to synthesize detailed, nuanced answers from multiple sources

◾Expert-Level Reports: Produces in-depth reports, complete with citations, for thorough exploration of complex topics

Other Google Upgrades On The Horizon

Google said it foresees Gemini developing into a universal assistant, and AI Mode transitioning towards becoming a more agentic experience, empowering users to not only find information but also get things done. The new agentic capabilities can extract information from emails and even help users try on and buy clothes.

Here are other new products and upgrades discussed at Google I/O 2025:

🔮Google Flow. An AI filmmaking tool created with and for creatives, Flow combines the best capabilities of Veo, Imagen and Gemini. How it works: Generated or uploaded images are customized with text prompts, turned into clips, then pieced together into a full-length video.

🔮SynthID. The tool embeds invisible watermarks into AI-generated media, and its new upgrade expands its capabilities so it can cover a larger volume of content. An upgraded SynthID Detector is in early testing stages, with the goal of making it easier to detect these watermarks in images, audio tracks, text and video.

🔮Chrome. Expanded AI capabilities coming to Chrome, complete with a Gemini integration.

🔮Project Astra. A research prototype developed by Google DeepMind, it’s envisioned as a real-time, interactive, universal AI assistant. The first features being introduced to everyone are its real-time video and screen-sharing capabilities, which are being integrated into Gemini Live.

🔮Project Mariner. Available only to Google AI Ultra subscribers in the U.S., this experimental AI agent was upgraded to handle up to 10 different tasks simultaneously.

🔮Android XR glasses. Capable of augmented reality, including live language translation.

🔮3D video calls

🔮Generative Media

🔮Real-time language translation

🔮Google Beam.

🔮Personalization

🔮AI Mode

🔮Gemini 2.5

🔮Gemini App

ChatGPT Versus Gemini

By some estimates, there are over 200,000 LLMs worldwide, but hard numbers are difficult to ascertain, since some companies count each version as an individual LLM, and new players regularly enter and exit the space. As it stands, ChatGPT and Gemini are the two most popular models, each with its own areas of excellence and its own loyal users.

A March survey by Morgan Stanley found that 40% of respondents used Google’s Gemini at least once a month, while 41% used ChatGPT monthly. ChatGPT was more popular among users 16 to 24 years old, with 68% opting for ChatGPT and 46% using Gemini.

The survey also showed that around 35 million Americans use ChatGPT, Gemini, or Meta AI on a daily basis, and that each platform has about 100 million monthly users. Many users switch among all three tools, leading to significant overlap.